Huawei Technologies’ lab in charge of large language models (LLMs) has defended its latest open-source Pangu Pro MoE model as indigenous, denying allegations that it was developed through incremental training of third-party models.


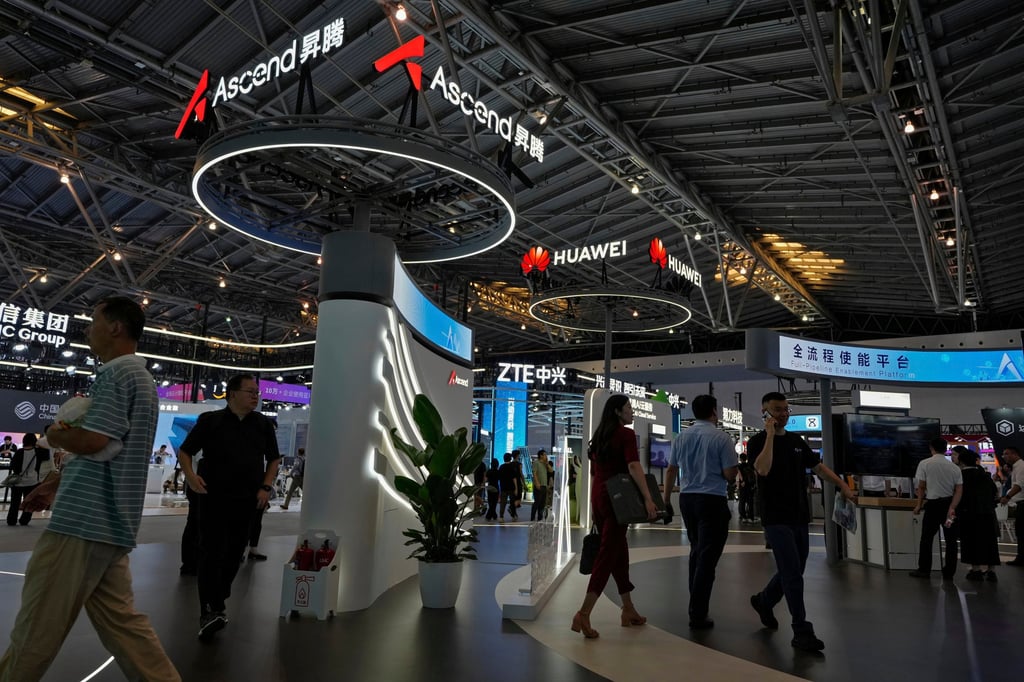

The Shenzhen-based telecoms equipment giant, considered the poster child for China’s resilience against US tech sanctions, is fighting to maintain its relevance in the LLM field, as open-source models developed by the likes of DeepSeek and Alibaba Group Holding gain ground.

Alibaba owns the South China Morning Post.

Huawei open-sourced an artificial intelligence (AI) model called Pangu Pro MoE 72B, which had been trained on the company’s home-developed Ascend AI chips. However, on Friday, HonestAGI, an account on the open-source community GitHub, alleged that the Huawei model showed “extraordinary correlation” with Alibaba’s Qwen-2.5 14B model, raising eyebrows among developers.

Huawei’s Noah’s Ark Lab, the unit in charge of Pangu model development, said in a statement on Saturday that the Pangu Pro MoE open-source model was “developed and trained on Huawei’s Ascend hardware platform and [was] not a result of incremental training on any models”.

The lab noted that development of its model involved “certain open-source codes” from other models, but said it had strictly followed the requirements of the relevant open-source licences and clearly labelled that code. The original repository uploaded by HonestAGI has since been removed, though a brief explanation remains.