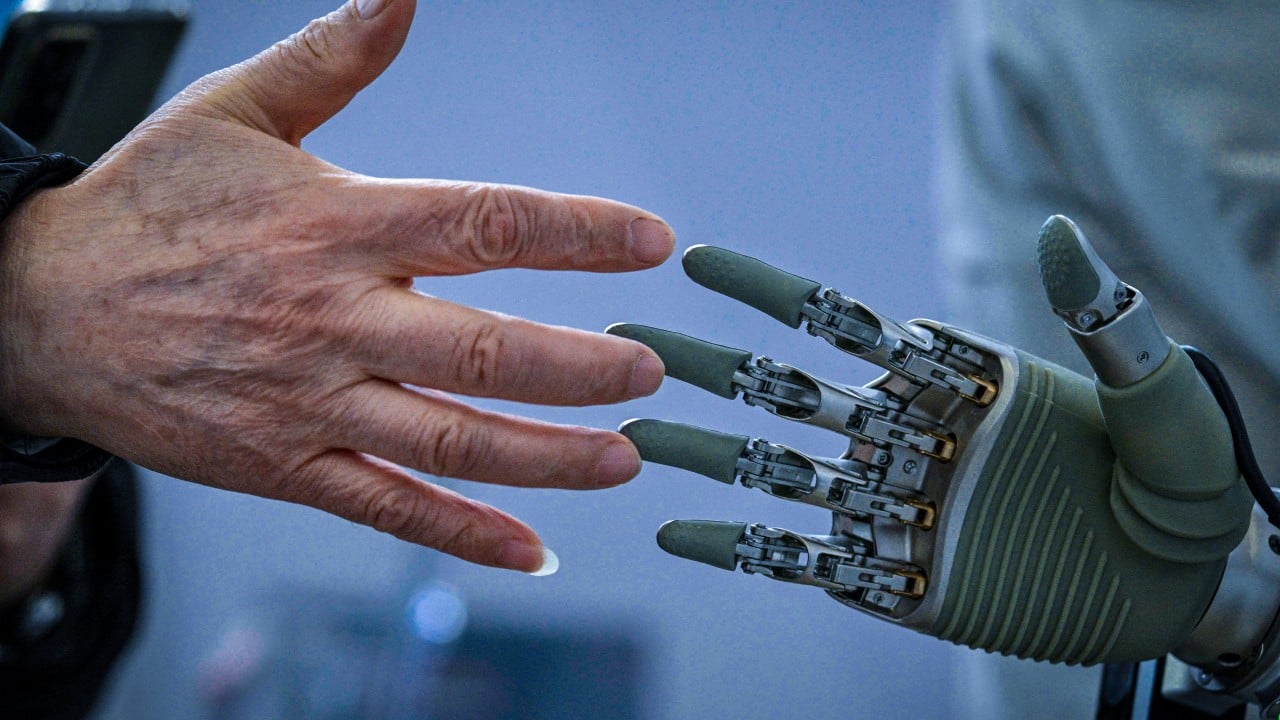

A major barrier to access to justice, not just in Hong Kong but around the world, is the high cost of legal services. It may come as no surprise, then, that lawyers are a prime target for competition by artificial intelligence (AI) systems, particularly those systems that rely on large language models.

As early as 2015, simple apps in the United States were using AI to contest parking tickets or automate basic legal processes such as filing forms. By 2023, OpenAI’s large language model GPT-4 was reported to have passed the Uniform Bar Exam.

Is it possible that barristers in court could one day be entirely replaced by AI systems with the ability to think and reason just like a human lawyer? The answer is not so simple.

The law is often thought of as a system of logical rules, which would make its automation by AI particularly appealing. Unbiased machines ruled only by logic should be able to achieve a result that is perfectly just and free from error or outside influence.

Large language models, in particular, which use the mathematics of probability to break down and reconstruct the logic of human language, seem especially suited for such a task. In some respects, that is certainly true, and so-called “AI lawyers” have already been deployed by law firms across the world for more complex tasks such as drafting contracts and reviewing documents.

While the AI legal revolution has begun, it is not without its early hiccups. The increasing use of AI models by practising lawyers, such as for generating legal submissions, has thrown the spotlight on AI’s tendency to “hallucinate”.