The bill would have required testing of AI models to ensure they don’t lead to mass death, attacks on public infrastructure, or cyberattacks.

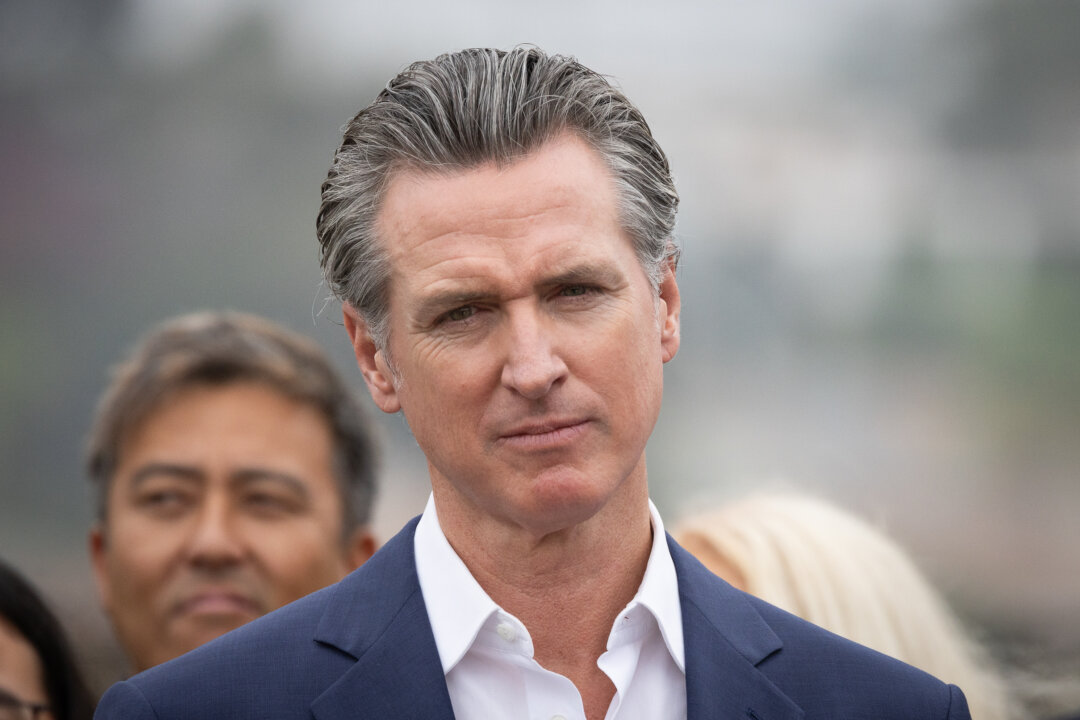

California Gov. Gavin Newsom on Sept. 29 vetoed a heavily debated artificial intelligence (AI) regulation bill that the Legislature approved in August.

Senate Bill 1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, raised the stakes in the debate over AI regulations.

If Newsom had signed the bill into law, it would have required testing of AI models to ensure they don’t lead to mass death, attacks on public infrastructure, or cyberattacks.

The legislation would have also created whistleblower protections, as well as a public cloud for the development of AI for the public good.

SB 1047 would have also created the Board of Frontier Models, a California state entity, to monitor the development of AI models.

In his veto message, Newsom criticized the bill in detail, warning that it risks “curtailing the very innovation that fuels advancement in favor of public good.”

He also said the bill regulates AI in a blanket fashion and lacks empirical analysis of the real threats posed by AI.

“A California-only approach may well be warranted—especially absent federal action by Congress—but it must be based on empirical evidence and science,” he wrote.

Newsom also took issue with the fact that the bill applies only to the largest, most expensive AI models: those trained using more than a set quantity of computing power that costs over $100 million. In the governor’s view, smaller and cheaper AI models could pose just as much of a threat to the public good or critical infrastructure.

“Smaller, specialized models may emerge as equally or even more dangerous than the models targeted by SB 1047,” Newsom wrote.

The governor said the legislation could slow advancements that serve the public good. He also argued that, by focusing only on the largest and most expensive models, SB 1047 could give the public “a false sense of security” when it comes to AI.

Additionally, Newsom said SB 1047 fails to consider whether an AI model is deployed in high-risk environments or uses sensitive information.

“Instead, the bill applies stringent standards to even the most basic functions—so long as a large system deploys it,” Newsom wrote. “I do not believe this is the best approach to protecting the public from real threats posed by the technology.”

Newsom said that he believes California must pass a law to regulate AI and that he remains “committed to working with the Legislature, federal partners,” and others in the state and nation to “find the appropriate path forward, including legislation and regulation.”

Democratic state Sen. Scott Wiener of San Francisco, the bill’s author, said the veto was “a missed opportunity for California to once again lead on innovative tech regulation” and “a setback for everyone who believes in oversight of massive corporations that are making critical decisions” regarding the use of AI.

Supporters of the bill included Elon Musk, AI startup Anthropic, the Center for AI Safety, tech equity nonprofit Encode Justice, the National Organization for Women, and whistleblowers from AI company OpenAI.

Musk’s record of criticizing California lawmakers made his endorsement of SB 1047 a surprise.

“This is a tough call and will make some people upset, but, all things considered, I think California should probably pass the SB 1047 AI safety bill,” he posted on X, the social media platform he owns. “For over 20 years, I have been an advocate for AI regulation, just as we regulate any product/technology that is a potential risk to the public.”

Other supporters included the Service Employees International Union, the Latino Community Foundation, and SAG-AFTRA.

An online poll conducted by the Artificial Intelligence Policy Institute from Aug. 4 to Aug. 5 found that 65 percent of the 1,000 California voters who responded supported SB 1047. The survey has a margin of error of 4.9 percentage points.

Opponents of the bill included tech behemoths such as Google, Meta, and OpenAI, who said the bill would undermine the California economy and the AI industry.

Several members of the California congressional delegation asked Newsom to veto the bill before the California Legislature passed it last month in a landslide vote.

The California Chamber of Commerce celebrated Newsom’s veto.

“We are grateful to Governor Newsom for the veto of SB 1047. Regulatory efforts to promote #AI safety are critical, but SB 1047 missed the mark in key ways,” Chamber President and CEO Jennifer Barrera wrote. “As a consequence, the bill would have stifled AI innovation, putting California’s place as the global hub of innovation at tremendous risk.”

In September, Newsom signed about a dozen other bills regulating various aspects of AI. One of them, Assembly Bill 2655, aims to protect voters from election deepfakes; others protect people from having their likeness used by others without consent.

Other bills require companies to disclose information about the data they use to train generative AI models and to supply tools that let consumers check whether a given piece of media was made by humans or by AI.

The SB 1047 veto derails the California Legislature’s efforts to align the state’s AI regulation with the European Union’s AI Act.

In September 2023, Newsom issued an executive order instructing California agencies to perform risk assessments of the threats and vulnerabilities AI poses to California’s critical infrastructure.

The U.S. AI Safety Institute, part of the National Institute of Standards and Technology, is developing guidance on national security risks. Newsom said the federal agency’s work is informed by “evidence-based approaches” to safeguarding the public.