Huawei Technologies has unveiled a software tool designed to accelerate inference in large artificial intelligence models, an advancement that could help China reduce its reliance on expensive high-bandwidth memory (HBM) chips.


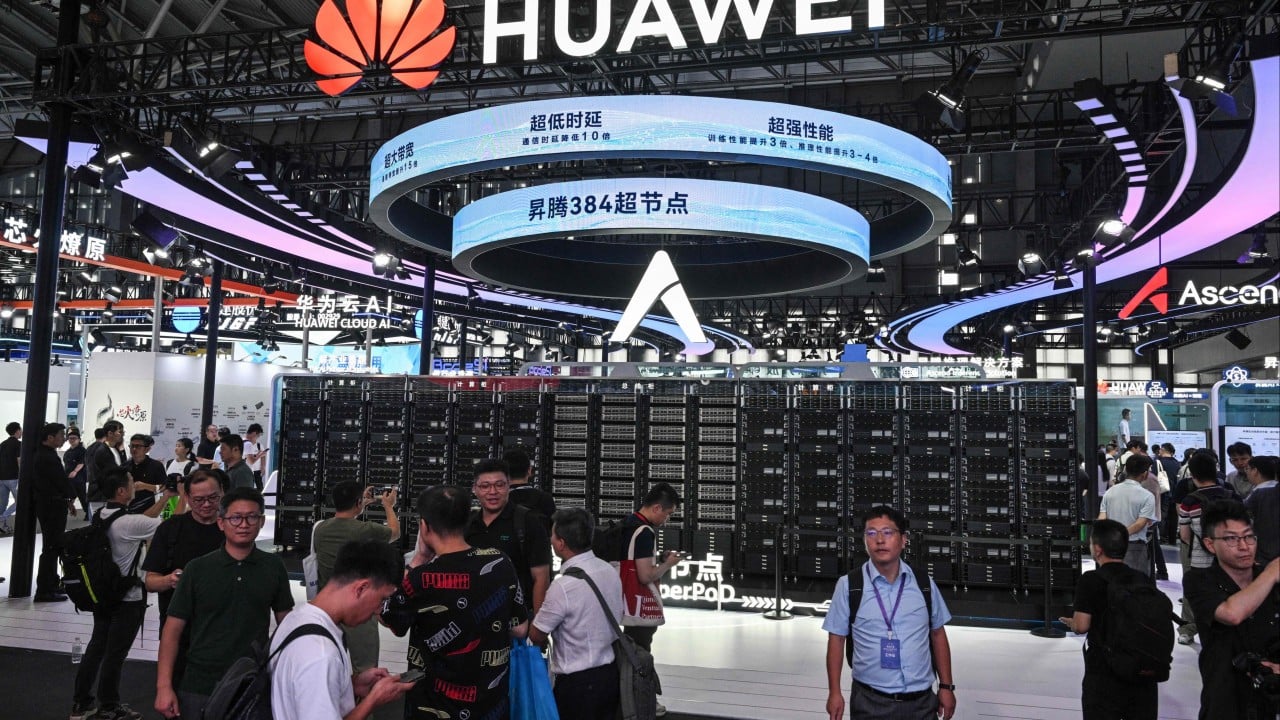

Unified Cache Manager (UCM) is an algorithm that places data in different types of memory, including ultra-fast HBM, standard dynamic random access memory (DRAM) and solid-state drives (SSDs), according to how quickly that data needs to be accessed. This improves inference efficiency, Huawei executives said at the Financial AI Reasoning Application Landing and Development Forum in Shanghai on Tuesday.
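Huawei's executives did not describe how UCM decides where each piece of data goes. The sketch below is therefore a hypothetical illustration of latency-tiered placement in general, not UCM's actual algorithm. It assumes a simple greedy policy: the most frequently accessed data blocks go to the fastest tier until it is full, then to the next fastest, and so on. The names `Tier` and `place_blocks`, the block names, the capacities and the latency values are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Tier:
    """One memory tier (hypothetical model, e.g. HBM, DRAM or SSD)."""
    name: str
    capacity: int        # number of data blocks the tier can hold (illustrative units)
    latency_us: float    # illustrative relative access latency, not measured figures
    blocks: list = field(default_factory=list)


def place_blocks(access_counts: dict[str, int], tiers: list[Tier]) -> dict[str, str]:
    """Greedy sketch: hottest blocks go to the lowest-latency tier with free space.

    This is an assumed policy for illustration only; it is not Huawei's UCM.
    """
    # Fastest tier first.
    ordered = sorted(tiers, key=lambda t: t.latency_us)
    # Most-accessed blocks first; ties broken by name so the result is deterministic.
    hot_first = sorted(access_counts, key=lambda b: (-access_counts[b], b))

    placement: dict[str, str] = {}
    tier_idx = 0
    for block in hot_first:
        # Skip past any tier that is already full.
        while tier_idx < len(ordered) and len(ordered[tier_idx].blocks) >= ordered[tier_idx].capacity:
            tier_idx += 1
        if tier_idx == len(ordered):
            raise ValueError("not enough capacity across all tiers")
        ordered[tier_idx].blocks.append(block)
        placement[block] = ordered[tier_idx].name
    return placement


if __name__ == "__main__":
    tiers = [Tier("SSD", 10, 100.0), Tier("HBM", 1, 1.0), Tier("DRAM", 2, 10.0)]
    counts = {"kv_a": 50, "kv_b": 5, "kv_c": 20, "kv_d": 1}
    print(place_blocks(counts, tiers))
```

With one slot of "HBM" and two of "DRAM", the most-accessed block lands in HBM, the next two in DRAM and the least-used one on SSD. A production system would also have to move blocks between tiers as access patterns change, which this static sketch does not attempt.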

Zhou Yuefeng, vice-president and head of Huawei’s data storage product line, said UCM demonstrated its effectiveness during tests, reducing inference latency by up to 90 per cent and increasing system throughput as much as 22-fold.

The move exemplifies how Chinese tech firms are leveraging software improvements to compensate for limited access to advanced hardware. Earlier this year, Chinese start-up DeepSeek captured global attention by developing powerful AI models with constrained chip resources.

Huawei plans to open-source UCM in September, releasing it first to its online developer community and later to the wider industry. The initiative could help China lessen its dependence on foreign-made HBM chips, a market mostly controlled by South Korea's SK Hynix and Samsung Electronics, as well as the US supplier Micron Technology.

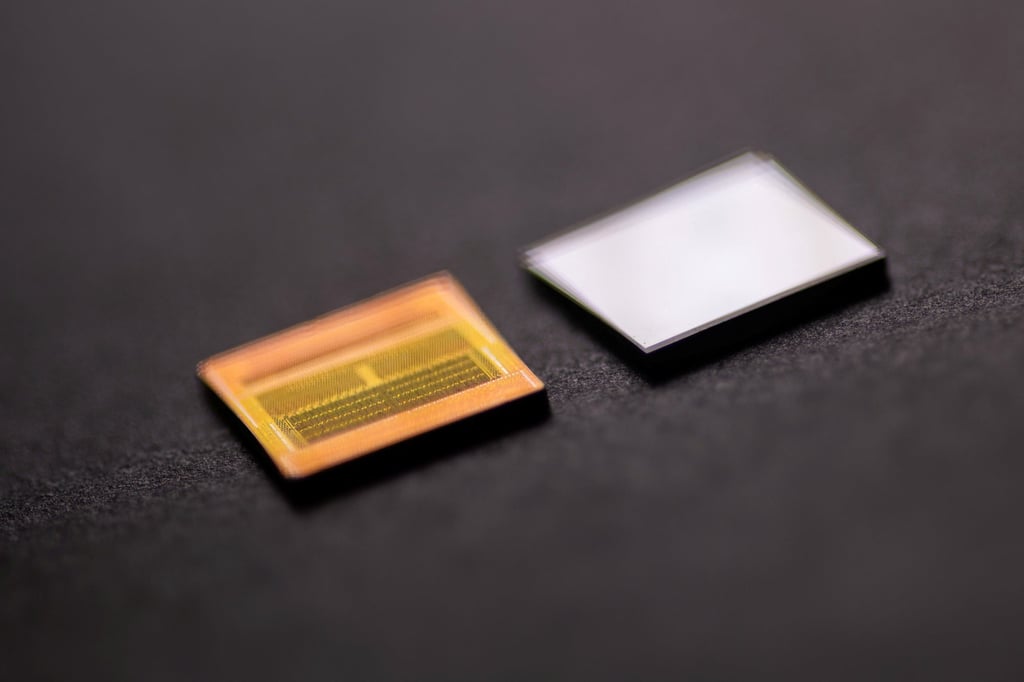

HBM is a high-speed, low-latency memory built from vertically stacked chips. It feeds data to AI processors at very high bandwidth, which is critical to their performance. The global HBM market is projected to nearly double in revenue this year to US$34 billion and to reach US$98 billion by 2030, driven largely by the AI boom, according to consulting firm Yole Group.
